Custom Segmentation

Lens Studio allows you to create a custom segmentation mask using an ML model through the ML Component. This guide walks you through creating a custom segmentation model for pizzas and building a Lens experience that makes the pizza appear hot.

The Custom Segmentation asset is available in the Lens Studio Asset Library. Import the asset into your project, create a new Orthographic camera, and place the prefab under it.

Skip to the Importing Your Model section if you have the ML model ready.

Skip to the Customizing Your Lens Experience section if you want to use the existing pizza segmentation texture.

Creating an ML Model

To learn more about Machine Learning and Lens Studio, please visit the ML Overview page.

To train a segmentation ML model, you will need the following:

- Machine learning training code: The code that describes how the model is trained. This is sometimes referred to as a notebook. You can download our example notebook if you want to follow along.

- Dataset: A collection of data that the training code learns from (in this case, we will use the COCO dataset).

The COCO dataset comes with multiple labels. You can change which labels are selected in order to change what the resulting mask segments. Try creating masks for different objects.
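As a rough illustration of what selecting labels means, the sketch below filters annotations by category name. It assumes the COCO annotation layout (`categories` and `annotations` lists linked by `category_id`). The tiny `coco` dictionary, its ids, and the helper `annotations_for_labels` are made up for this example and are not taken from the example notebook.

```python
# Minimal sketch: pick out the images that contain a chosen label.
# The `coco` dict is made-up sample data in the COCO annotation layout;
# the ids below are illustrative, not the real COCO ids.
coco = {
    "categories": [
        {"id": 1, "name": "pizza"},
        {"id": 2, "name": "donut"},
    ],
    "annotations": [
        {"id": 10, "image_id": 100, "category_id": 1},
        {"id": 11, "image_id": 100, "category_id": 2},
        {"id": 12, "image_id": 101, "category_id": 2},
        {"id": 13, "image_id": 102, "category_id": 1},
    ],
}


def annotations_for_labels(dataset, label_names):
    """Return the annotations whose category name is in label_names."""
    wanted_ids = {
        category["id"]
        for category in dataset["categories"]
        if category["name"] in label_names
    }
    return [
        annotation
        for annotation in dataset["annotations"]
        if annotation["category_id"] in wanted_ids
    ]


pizza_annotations = annotations_for_labels(coco, {"pizza"})
pizza_image_ids = sorted({a["image_id"] for a in pizza_annotations})
print(pizza_image_ids)  # [100, 102]
```

Passing a different set of names, such as `{"donut"}`, would select the training examples for a different mask.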

Training Your Model

There are many different ways you can train your model. For our example, we will use Google Colaboratory. To see other ways of training, take a look at the ML Frameworks page of the guide section.

Head over to Google Colaboratory, select the Upload tab, and drag the Python notebook into the upload area.

The notebook is well documented with information about what each section of the code is doing. Take a look at the notebook to learn more about the training process itself!

Once your notebook has been opened, you can choose which labels you want the model to segment out. Then, you can run the code by choosing Runtime > Run All in the menu bar. This process may take a while to run, as creating a model is computationally intensive.

Downloading Your Model

You can scroll to the Train Loop section of the notebook to see how your ML model is coming along.
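One common way to judge how well a segmentation model is doing is intersection over union (IoU) between the predicted mask and the labeled mask. Whether the example notebook reports this particular metric is an assumption. The sketch below only shows how the number is computed, using a hypothetical helper `intersection_over_union` and made-up masks.

```python
def intersection_over_union(predicted, target):
    """IoU between two binary masks given as equally sized lists of rows (0 or 1)."""
    intersection = 0
    union = 0
    for predicted_row, target_row in zip(predicted, target):
        for p, t in zip(predicted_row, target_row):
            intersection += 1 if (p and t) else 0
            union += 1 if (p or t) else 0
    # Two empty masks agree perfectly, so treat that case as a score of 1.0.
    return intersection / union if union else 1.0


# Made-up 2x3 masks: 1 marks a "pizza" pixel, 0 marks background.
predicted_mask = [
    [0, 1, 1],
    [0, 1, 0],
]
target_mask = [
    [0, 1, 1],
    [0, 0, 1],
]
print(intersection_over_union(predicted_mask, target_mask))  # 0.5
```

A score of 1.0 means the two masks match exactly, and a score near 0 means they barely overlap.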

Once you are happy with the result, you can download your .ONNX model.

When using a dataset to train your model, make sure that you adhere to that dataset's usage license.

Importing Your Model

Now that we have our model, we’ll import it into Lens Studio.

You can drag and drop your .ONNX file into the Asset Browser panel to bring it into Lens Studio.

Make sure the Custom Segmentation asset is imported and placed under the Orthographic camera.

To use the imported model, go to the Scene Hierarchy panel and, under the Custom Segmentation object, select the ML Component object. Then, in the Inspector panel, click the field next to Model and choose your newly imported model in the pop-up window.

Next, set the model's input texture to Screen Crop Texture or Device Camera Texture. Then click the Output Texture field and create a new Proxy Texture. A Proxy Texture asset is a texture that can be used in materials and effects.

If you have created a new proxy texture, make sure to set it as the input for the materials provided in this example to see the effects.

Customizing Your Lens Experience

In the ML Component, you can see that the Output Texture is set to Segmentation Texture. You can use this texture anywhere a texture can be used.

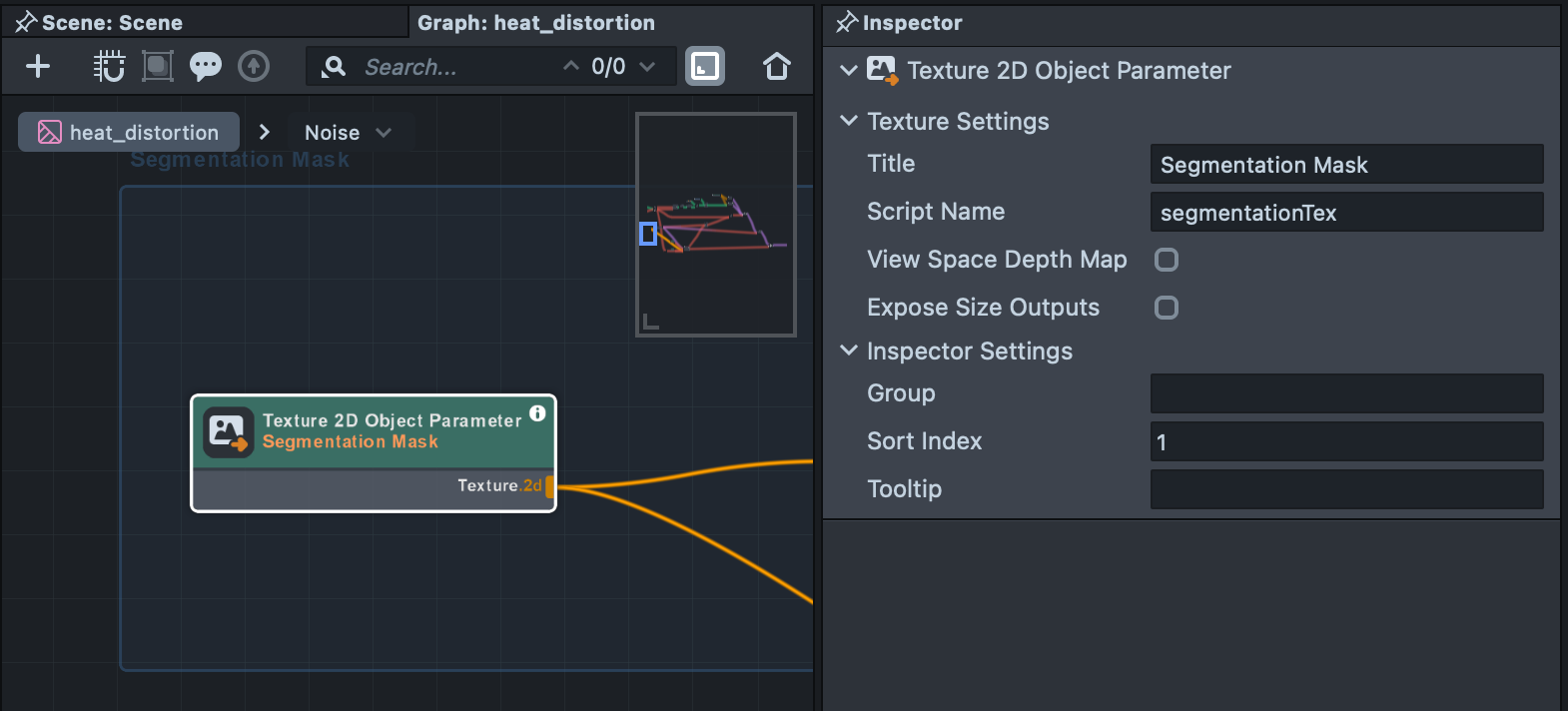

Using the output texture in the Material Editor

In this example, the texture is used as an input in the Material Editor to create the heat effect.

The segmentation texture is passed into the material through the Texture 2D Object Parameter node, which allows your material to access inputs.

Please visit the Material Editor guides for more information.

Play around with some of the parameters of the Heat Distortion material and check out the graph to see how it works!

The Heat Distortion material uses the segmentation mask to determine which areas should be grayscale (pixels that are not pizza) and to generate the smoke noise that appears on top of the pizza.
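The grayscale part of that idea can be sketched per pixel in plain Python. This is not the Heat Distortion material's actual graph; it is an illustration that assumes color channels and mask values in the 0 to 1 range and uses the Rec. 601 luma weights for the grayscale conversion. The function names are hypothetical.

```python
def luminance(rgb):
    """Approximate perceived brightness using Rec. 601 luma weights."""
    r, g, b = rgb
    return 0.299 * r + 0.587 * g + 0.114 * b


def desaturate_outside_mask(rgb, mask):
    """Blend a pixel between grayscale (mask = 0) and full color (mask = 1)."""
    gray = luminance(rgb)
    return tuple(gray + (channel - gray) * mask for channel in rgb)


orange = (1.0, 0.5, 0.0)
# mask = 1.0 stands for "this pixel is pizza": the color is kept.
pizza_pixel = desaturate_outside_mask(orange, mask=1.0)
# mask = 0.0 stands for "this pixel is not pizza": the pixel turns gray.
background_pixel = desaturate_outside_mask(orange, mask=0.0)
print(pizza_pixel, background_pixel)
```

Mask values between 0 and 1, which typically occur at the edge of a segmented object, produce a partially desaturated pixel, so the transition stays soft.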

Using Segmentation Texture to cut things out

You can also use the Segmentation Texture directly on a camera to mask what it renders.

For example, let's say we added a color correction object to give our image a sepia tone.

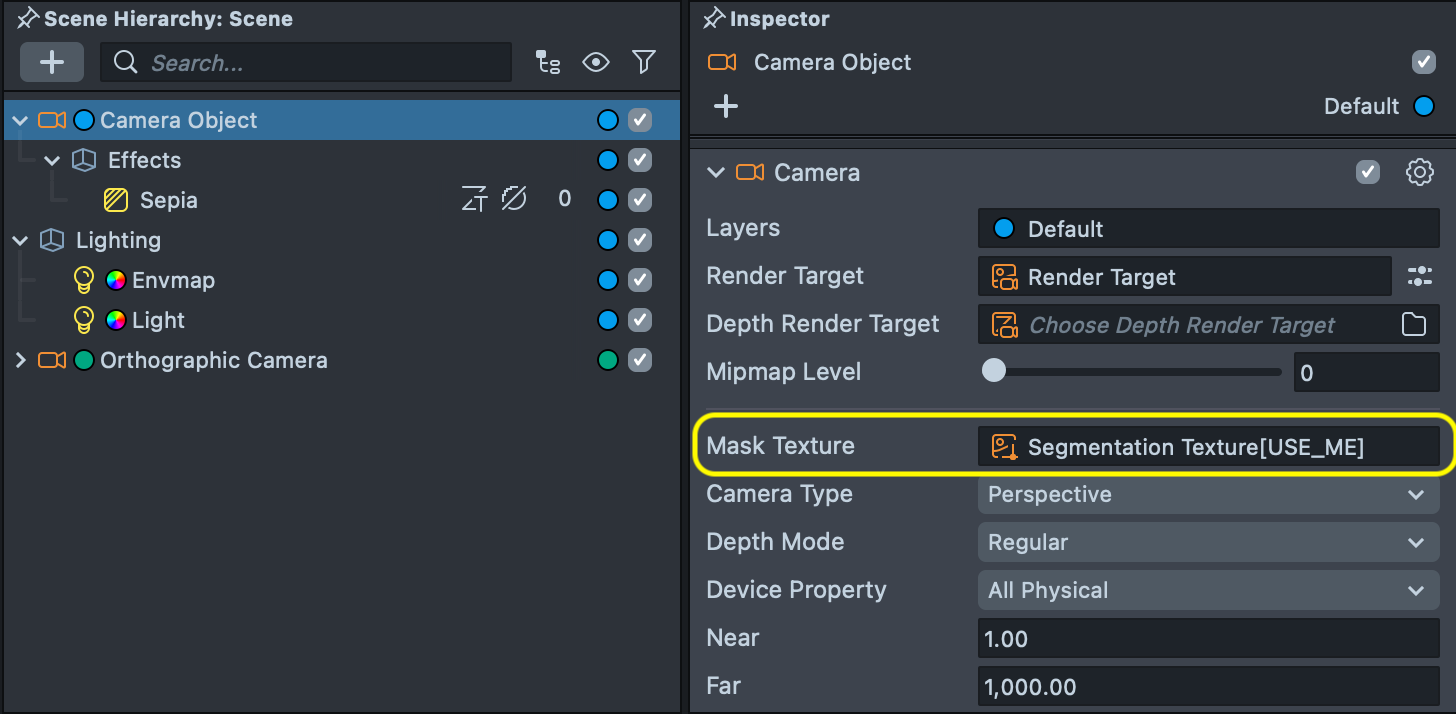

Then, you can select the Camera object, and use the Segmentation Texture from the ML Component as the Camera’s Mask Texture.
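Conceptually, a mask texture on a camera decides, pixel by pixel, how much of that camera's output is shown. The sketch below imitates this for a single pixel. It is an assumption-laden illustration rather than Lens Studio's implementation: the sepia weights are a commonly used color matrix, and `sepia` and `composite_with_mask` are hypothetical helpers.

```python
def sepia(rgb):
    """Apply a commonly used sepia color matrix, clamping each channel to 1.0."""
    r, g, b = rgb
    return (
        min(1.0, 0.393 * r + 0.769 * g + 0.189 * b),
        min(1.0, 0.349 * r + 0.686 * g + 0.168 * b),
        min(1.0, 0.272 * r + 0.534 * g + 0.131 * b),
    )


def composite_with_mask(base, layer, mask):
    """Show `layer` where mask = 1 and `base` where mask = 0."""
    return tuple(b * (1.0 - mask) + top * mask for b, top in zip(base, layer))


camera_pixel = (0.2, 0.4, 0.6)
# Inside the segmented area the sepia-toned layer is visible.
inside_mask = composite_with_mask(camera_pixel, sepia(camera_pixel), mask=1.0)
# Outside the segmented area the original camera pixel shows through.
outside_mask = composite_with_mask(camera_pixel, sepia(camera_pixel), mask=0.0)
print(inside_mask, outside_mask)
```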

Please visit the Fullscreen Segmentation guide to learn about how you can use segmentation textures to cut out parts of an object or image.

Previewing Your Lens

You’re now ready to preview your Lens! To preview your Lens in Snapchat, follow the Pairing to Snapchat guide.